Recently, 3D Gaussian splatting (3D-GS) has gained popularity in novel-view scene synthesis. It addresses the challenges of lengthy training times and slow rendering speeds associated with Neural Radiance Fields (NeRFs). Through rapid, differentiable rasterization of 3D Gaussians, 3D-GS achieves real-time rendering and accelerated training.

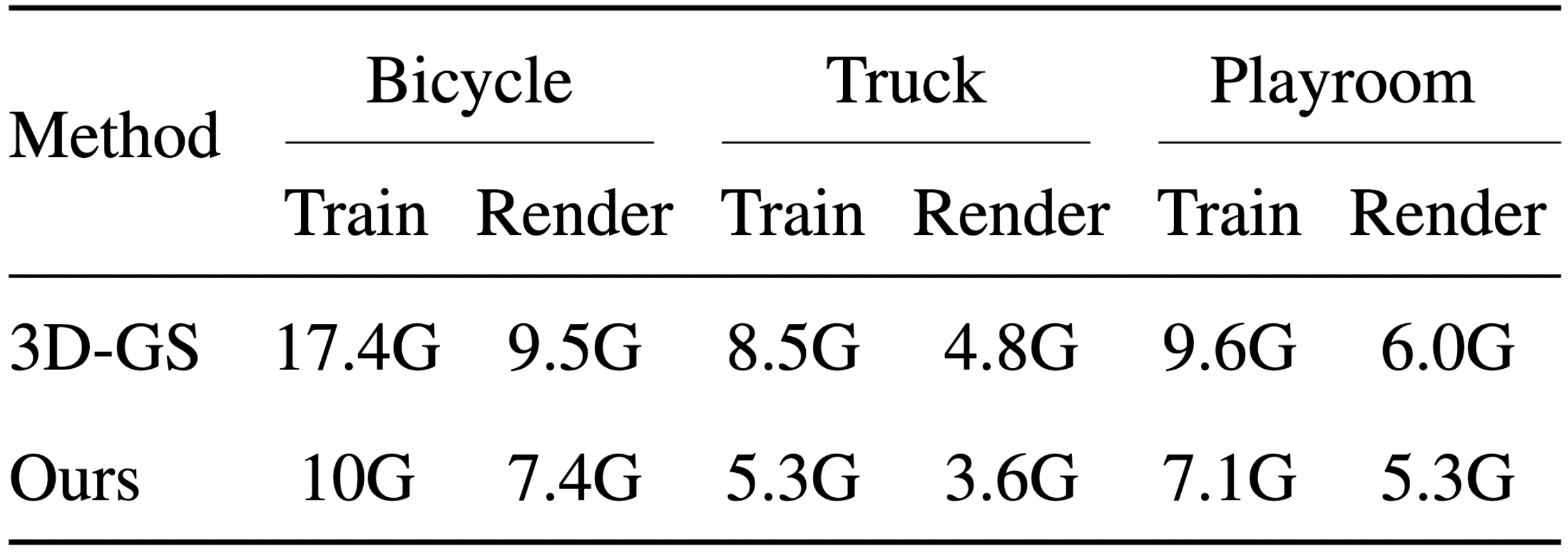

3D-GS models, however, demand substantial memory for both training and storage, as they require millions of Gaussians in the point cloud representation of each scene.
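To give a rough sense of scale, the back-of-the-envelope sketch below estimates the uncompressed size of a model. The per-Gaussian layout (position, scale, rotation quaternion, opacity, and degree-3 spherical-harmonic color stored as float32) is an assumption based on the commonly used 3D-GS parameterization, not something stated in the text above, and the names `FLOATS_PER_GAUSSIAN` and `model_size_mb` are hypothetical.

```python
# Assumed per-Gaussian attribute layout (a common 3D-GS parameterization;
# this layout is an assumption, not taken from the text above).
FLOATS_PER_GAUSSIAN = {
    "position": 3,
    "scale": 3,
    "rotation": 4,   # unit quaternion
    "opacity": 1,
    "sh_color": 48,  # 16 spherical-harmonic coefficients x 3 color channels
}


def model_size_mb(num_gaussians: int, bytes_per_float: int = 4) -> float:
    """Return the uncompressed attribute storage in megabytes (10^6 bytes)."""
    floats_per_gaussian = sum(FLOATS_PER_GAUSSIAN.values())
    return num_gaussians * floats_per_gaussian * bytes_per_float / 1e6


if __name__ == "__main__":
    for n in (1_000_000, 5_000_000):
        print(f"{n:>9,d} Gaussians: {model_size_mb(n):8.1f} MB")
```

Under these assumptions, storage grows linearly with the number of Gaussians, so reducing either the count or the bytes per attribute directly reduces the footprint.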

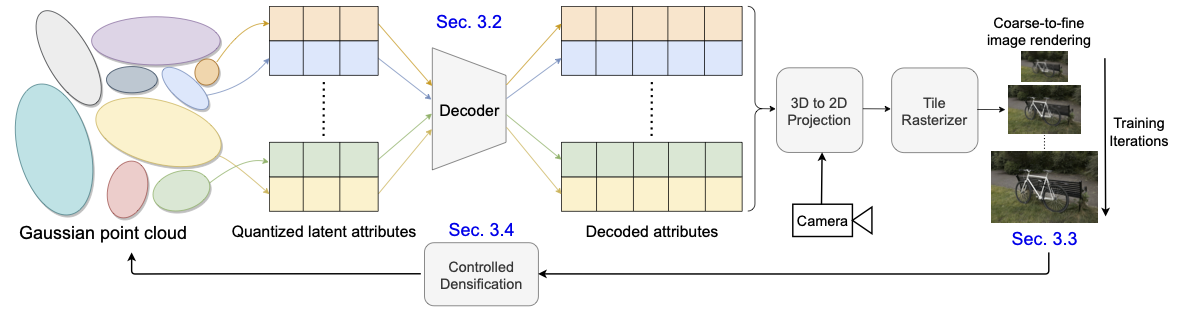

We present a technique that uses quantized embeddings to significantly reduce memory storage requirements, together with a coarse-to-fine training strategy for faster and more stable optimization of the Gaussian point clouds. We additionally incorporate a pruning stage that removes redundant Gaussians. Our approach yields scene representations with fewer Gaussians and quantized representations, leading to faster training and real-time rendering of high-resolution scenes. We reduce memory by more than an order of magnitude while maintaining reconstruction quality.
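As a generic illustration of why quantization saves memory, the sketch below applies per-channel uniform 8-bit quantization to a float32 attribute array. This is a stand-in technique chosen for simplicity and is not the quantized-embedding scheme referred to above, whose details are not given here; the function names and the random attribute data are hypothetical.

```python
import numpy as np

LEVELS = 255  # number of steps representable by an 8-bit code


def quantize(attrs: np.ndarray):
    """Map each column of a float array to uint8 codes plus a per-column offset and step."""
    lo = attrs.min(axis=0)
    step = (attrs.max(axis=0) - lo) / LEVELS
    step = np.where(step == 0, 1.0, step)  # guard against constant columns
    codes = np.round((attrs - lo) / step).astype(np.uint8)
    return codes, lo, step


def dequantize(codes: np.ndarray, lo: np.ndarray, step: np.ndarray) -> np.ndarray:
    """Reconstruct approximate float32 attributes from the integer codes."""
    return (codes.astype(np.float32) * step + lo).astype(np.float32)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Hypothetical stand-in for per-Gaussian attributes (10,000 Gaussians x 48 values).
    attrs = rng.normal(size=(10_000, 48)).astype(np.float32)

    codes, lo, step = quantize(attrs)
    recon = dequantize(codes, lo, step)

    print("float32 bytes:", attrs.nbytes)
    print("uint8 bytes:  ", codes.nbytes)
    print("max abs error:", float(np.abs(recon - attrs).max()))
```

Storing one byte per value instead of four gives a 4x reduction for the quantized attributes, at the cost of a reconstruction error of roughly half a quantization step per value. Reaching a larger overall reduction would require combining such compression with fewer Gaussians, as the pruning stage described above aims to do.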

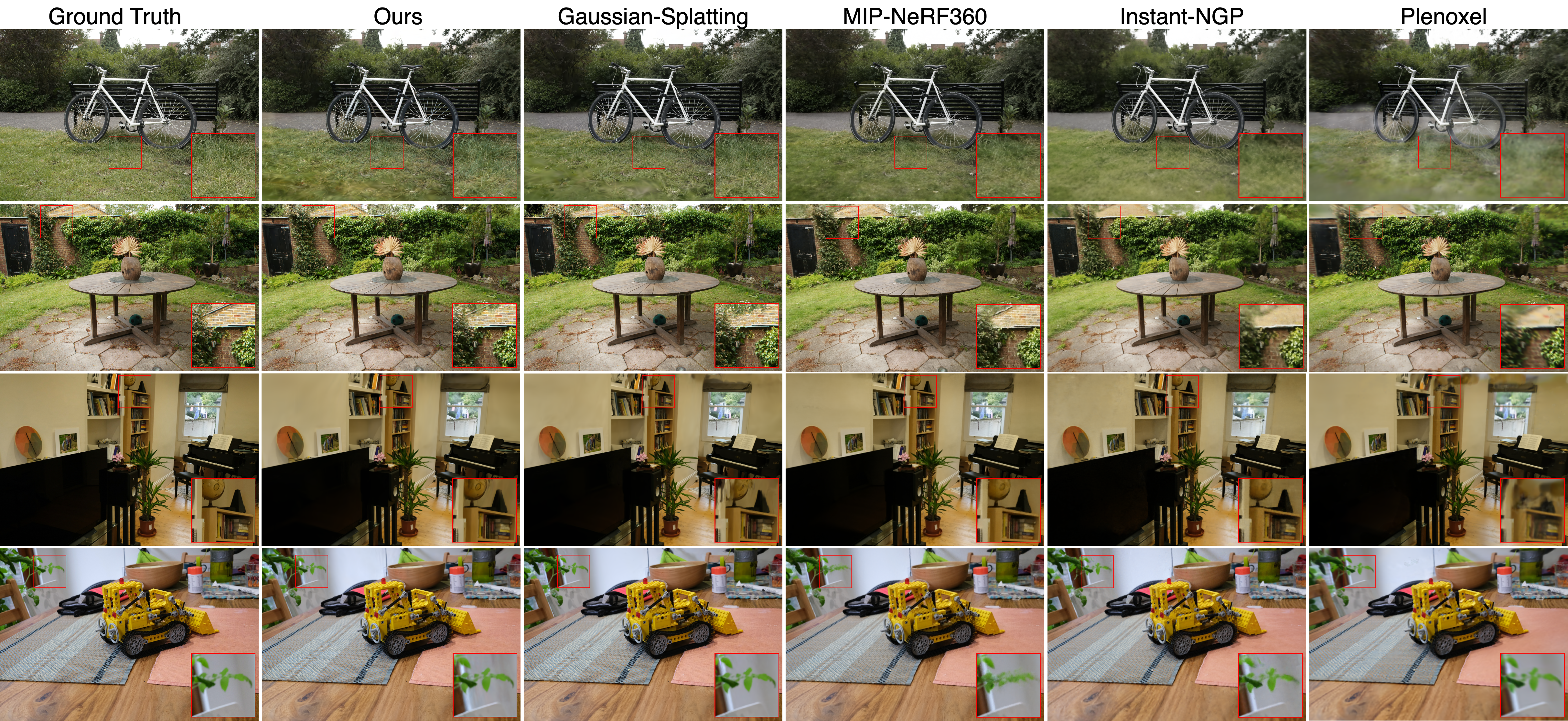

We validate the effectiveness of our approach on a variety of datasets and scenes, preserving visual quality while consuming 10-20x less memory and achieving faster training and inference.